Latest revision as of 11:30, 11 January 2026

AI is Fake For Goodness Sake

Author: The Old Coder aka Robert Kiraly

License: Creative Commons BY-NC-SA 4.0 International

Revision: 260111

This is an article about "AI". The reading level for this article is High School to adult. An interest in business will help. Most of the illustrations are by GenAI, one type of AI. GenAI is discussed further below.

The thesis is that "AI" in its current form is, in key respects, not real and that the current "AI" era is a mania. This article is slightly unusual in that I asked AIs to rebut those points. Surprisingly, AIs seem to agree with much of what I've said.

To be clear, AI is important and a part of the future. The discussion here is related to the current frenzy. We'll edit the master copy of this document to reflect feedback. The master copy is located temporarily at:

https://wiki.minetest.org/AI

The master copy may move to a different site.

These are the take-aways up front:

AI is here to stay. It'll play a role in your life in the future. However, as of 2026, most of the over-the-top AI predictions that you see in the media are simply wrong.

AI is presently a mania similar to the dot-com mania or the Dutch Tulip mania. Or crypto, though that didn't go so far. It isn't real. This doesn't mean that there won't be AI in the future. It does mean that the current AI frenzy will collapse first.

For an introduction to manias of this type, read the article linked below:

https://wiki.minetest.org/misc/manias.html

I asked an AI about this. The AI pointed out that Dutch Tulips had no intrinsic value, but the AI itself was able to do things. This is true, but the point is that we're in an AI mania, not that AI has no value.

The heart of the AI mania is the idea that businesses are going to be able to replace people en masse, large parts of the work force, with software. Business profits will skyrocket after that because you don't need to pay software a salary. The idea is attractive to CEOs. They want to believe it and so they'll continue to believe it for as long as possible.

At the same time, the people who have been fired are supposedly going to rush to pay hundreds of dollars per month for AI services that aren't even defined yet.

"Food and rent can wait. Must pay for AI!"

That side of the picture is referred to as Business-to-Consumer or B2C sales. AI firms hope to profit from Business-to-Business or B2B sales as well.

B2B sales are going to happen and they might be significant. Mass replacement of the workforce and mass B2C sales, not so much. There will be progress, from the AI business perspective, at all three levels over time, but not a societal transformation.

I asked an AI to comment on this position. The AI responded, "This perspective aligns with a significant portion of current expert analysis and market trends as of early 2026."

The AI mania is mostly about two types of software: LLM and GenAI. Neither of those is actually AI or "artificial intelligence". Both of them have serious problems.

So, you should expect AI as a useful tool to happen. But AI as a transformation comparable to the arrival of God on Earth, not so much. It'll be more like the fact that most people started to use websites and smartphones over time.

There will be an AI crash or at least a pullback. Useful parts of the AI ecosystem will survive just as happened with the dot-coms after the 1990s. The rest will be gone just as most of the original dot-com companies are gone.

The rest of this article discusses the problems with LLM and GenAI.

Some AI Basics

This section is AI Basics 101. If you don't need to know how AI works, you can skip this part.

A brain is made of billions of cells called neurons in addition to other types of cells. Each neuron is a small analog computing unit. Analog means that things are just approximate and not exact like in digital computers. A neuron takes multiple inputs and processes them to generate an output.

A "neural net" is a toy version of a set of neurons written in code. It's only a toy. Real neurons are far more complex.

If you know a programming language such as 'C' or Python, you can code a simple neural net fairly easily. You don't need a college degree or special training. I did this myself for my honors thesis 45 years ago.
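To make that concrete, here is a minimal sketch of a toy neural net in Python. It is only an illustration: the function names, the weights, and the choice of a sigmoid as the squashing function are all assumptions made for this example, and nothing is trained here.

```python
import math

def neuron(inputs, weights, bias):
    """One toy neuron: a weighted sum of the inputs, squashed into (0, 1)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid squashing function

def tiny_net(inputs):
    """Two hidden neurons feeding one output neuron. All weights are made up."""
    h1 = neuron(inputs, [0.5, -0.6], 0.1)
    h2 = neuron(inputs, [-0.3, 0.8], 0.0)
    return neuron([h1, h2], [1.2, -0.7], 0.05)

print(tiny_net([1.0, 0.0]))  # a single number between 0 and 1
```

The code is only a few lines long. Whatever "knowledge" such a net has lives in the weight numbers, which in a real system are adjusted automatically from data rather than typed in by hand.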

Commercial neural nets are more advanced these days, though not at the level of real neurons. However, the core principles of operation have remained largely the same over the decades.

In short, the magic isn't in the code but in data such as books and pictures that is fed into the code and ground up into what you can think of as data soup.

The books and pictures are still there. However, they are in bits and pieces that are mixed together and scattered about. If you know what a hologram is, the data soup is very similar to a hologram.

The AI furor that is in the news is mostly about "LLM" and "GenAI". LLM and GenAI are two types of software that are based on neural nets.

LLM is designed to write text or code in text. The term "LLM" stands for Large Language Model. GenAI is designed to make pictures or music or other types of media. The term "GenAI" stands for Generative AI.

All Hail AI Scale

The AI mania started in part due to the belief that the power of AI software was going to "scale". "Scale" meant that adding hardware would improve AI performance proportionately and that this would continue long enough to produce human-equivalent AIs or "AGIs". Note: AGI is short for Artificial General Intelligence.

Scaling has happened, but net improvements have declined over time. There is no reason to believe that scaling from current levels will produce massive improvements in AI.
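The idea of declining improvements can be sketched with a toy curve. The example below assumes, purely for illustration, that error falls as a power law of the hardware added. The function name `toy_error` and the numbers `a` and `alpha` are invented for this sketch and are not measurements of any real system.

```python
def toy_error(compute, a=1.0, alpha=0.1):
    """Hypothetical power-law curve: error shrinks slowly as compute grows."""
    return a * compute ** (-alpha)

previous = toy_error(1)
for exponent in range(1, 6):
    compute = 10 ** exponent
    current = toy_error(compute)
    # Each step multiplies the hardware by 10; "gain" is the error removed by that step.
    print(f"{compute:>7}x hardware: error {current:.3f}, gain {previous - current:.3f}")
    previous = current
```

Under these assumed numbers, every step costs ten times more hardware than the step before it and buys a smaller improvement. That is the shape of the problem, whatever the real numbers turn out to be.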

Additionally, AGI based solely on the two current focus areas of LLM and GenAI isn't even possible.

LLM and GenAI are similar to parrots, though on a grand scale. "Polly wants to drink Niagara Falls." They're not going to end up as AGI based solely on hardware no matter how much hardware is added.

I asked an LLM about this. The LLM answered as follows: "Critics argue that LLMs are pattern matchers and interpolators of their training data, lacking comprehension or the ability to generalize robustly outside their training. However, a significant portion of the AI research community believes that LLM and GenAI, with architectural changes, could be stepping stones towards AGI."

I'll accept that last part. Someday, software that started out as LLM and GenAI might turn into something very different. But LLMs and GenAIs are in use now without, in most cases, a solid business case or even the ability to operate reliably, let alone anything that would justify the types of rapturous claims that are being made.

In short, incredible amounts of money and/or natural resources are being poured into a technology that doesn't work as advertised on the corporate side and that has no large-scale business model at all yet on the consumer side.

Hallucinate Is Not Great

Both LLMs and GenAIs hallucinate. This means that they make sh*t up at random or demonstrate a total lack of boundaries. This leads not only to minor glitches but, very frequently, to extra arms and legs in GenAI illustrations or instances where LLMs write about events that never happened.

This is a genuine and intractable problem for businesses.

Fast Food chains are using LLMs to take orders. The LLMs do creative things with orders. Customers are displeased. Law firms are using LLMs to replace paralegals. The LLMs are inventing random case citations. That has gone poorly for the law firms in Court. In fact, some of the firms have been sanctioned.

In one minor but striking example, a major airline ran an ad that featured a smiling stewardess. Despite the fact that Marketing had presumably looked at the ad before publication, she had, of course, an extra arm.

The supposed cure for AI hallucinations is to have a human look carefully at every output produced by an AI. But this is expensive, manual corrections are expensive, and things are always going to slip by.

I asked an LLM about this. The LLM defended itself as follows: "While the output might appear random or nonsensical to a human, it's not truly random in a statistical sense." That isn't much of a defense.

The hallucination problem is fundamental to LLM and GenAI. Fine-tuning of different types can be done. Filters can be added to output. Those are bandages. The bottom line is that the hallucination problem can't be fixed. There is no solution.

The LLM quoted above agrees with me as follows:

"This is a widely held view among researchers and developers. Hallucination is not merely a bug but an inherent characteristic stemming from the way these models are designed and trained. This unreliability directly impacts the ability to build solid business cases, as the cost of human oversight and correction can negate potential savings or benefits."

Pass Me the Mustache

LLMs have another problem, similar to hallucination, that can be described as a dangerous lack of boundaries. For example:

1. LLMs tend to offer dangerous advice. This includes telling both children and adults to kill themselves. People have actually killed themselves after receiving such advice. Dead children aren't ideal for a company's image.

2. In early 2026, Elon Musk's primary AI system was revealed to be creating graphic and detailed sexualized images of real people, violent sexual images, and sexual images of children.

3. Racial or other types of group hatred bubble up at times from what you can think of as an LLM's subconscious.

LLMs may start to talk, out of the blue, about Blacks or other minorities in ways that are awkward for businesses. For example, if a customer's name sounds "Black", an LLM may ask the customer if he or she would like watermelon and fried chicken as part of a business deal.

As a colorful and specific example:

In July 2025, Elon Musk's Grok LLM decided that a person's name sounded Jewish and said, in that context, that Adolf Hitler was the "20th century historical figure" that was best suited, "no question", to "deal with" "anti-white hate". Grok added that, if this view made it "literally Hitler, then pass the mustache".

I asked an LLM about that incident. In a humorous note, the LLM vociferously denied that that had happened and said that it was a lie. But it's documented on multiple news sites including the main BBC site. Here's a link to a BBC article:

https://oldcoder.org/misc/grok-hitler-2025.html

Elon Musk blamed users for asking Grok leading questions. That response misses the point.

Regardless of where a company stands on political issues, this isn't the sort of thing that it wants LLM Sales or Support to be saying.

The LLM lack of boundaries issue is slightly less problematic than the LLM hallucinations issue. Filters can be placed between LLMs and the outside world to hide certain types of statements. Researchers are looking, as well, for ways to prevent harmful tendencies from getting into the LLM subconscious level to begin with.
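A filter of the kind described above can be sketched in a few lines. This is a hypothetical example, not how any particular vendor does it: the pattern list, the fallback message, and the function name `filter_reply` are all assumptions made for illustration.

```python
import re

# Hypothetical blocklist. A real deployment would need far more than this.
BLOCKED_PATTERNS = [
    re.compile(r"\bhitler\b", re.IGNORECASE),
    re.compile(r"\bkill\s+yourself\b", re.IGNORECASE),
]

FALLBACK = "Sorry, I can't help with that. Let me connect you with a person."

def filter_reply(llm_reply):
    """Return the reply unchanged unless it matches a blocked pattern."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(llm_reply):
            return FALLBACK
    return llm_reply

print(filter_reply("Your order is ready."))
print(filter_reply("If that makes me Hitler, pass the mustache."))
print(filter_reply("If that makes me H1tler, pass the mustache."))
```

The first reply passes through and the second is replaced by the fallback. The third, with one character changed, slips past the filter. That is the weakness of this approach: a filter only hides the statements that somebody thought to list in advance.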

This said, for the time being, LLMs are going to find ways at times to praise Hitler or to tell children to kill themselves. It's surprising that this issue is taken as lightly as it is in discussions of AIs in public-facing roles.

I asked an LLM about the lack of boundaries issue. It seemed to agree with the preceding analysis.

AI Thefty is Hefty

To create LLMs and GenAIs, the major ones, massive amounts of copyrighted data are copied into the data soup that I've mentioned.

Authors and artists consider this to be theft. AI businesses respond that a legal defense known as transformative use applies. In short, transformative use says that you may be able to use copyrighted works without permission if you change the works enough.

That part of the law serves a purpose. For example, it's potentially important in protecting parodies. However, there is more to the issue.

In the United States, the Supreme Court has ruled recently, in Andy Warhol Foundation v. Goldsmith, 598 U.S. 508 (2023), that the purpose and character of a copy is a factor in its legality. Note: For more information on that case, visit:

https://en.wikipedia.org/wiki/Andy_Warhol_Foundation_for_the_Visual_Arts,_Inc._v._Goldsmith

In other courts and/or cases, issues such as commercial use, wholesale copying, market impact, and reproduction of pieces of originals without transformation have all been found to be factors in the legality of copied works.

It isn't usually possible to delete just a single contested work from the "mind" of an LLM or GenAI. So it seems quite possible that, if enough parties sue enough AI businesses for copyright infringement, AI businesses as a whole might need to reset some of the AI models that they've invested millions of dollars in and start over.

Ponzi Scheme is the Dream

Circular financing is a type of business financing where money flows in closed loops between 2 or more companies.

For example, in a typical circular financing deal, Nvidia might invest $100 billion in OpenAI and OpenAI would then use that money to purchase Nvidia chips.

Or a similar deal might be done that involves 3 or 4 companies moving money and products in multiple directions but entirely inside the group.
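The arithmetic of a two-company loop can be sketched as follows. This is a deliberately simplified toy: the function name and the company labels A and B are invented for this example, the amounts are in billions of dollars, and the sketch ignores the ownership stake that A receives and the chips that B receives.

```python
def circular_deal(investment, chip_purchase):
    """Toy two-company loop: A invests in B, then B buys A's chips with the money."""
    a_cash = -investment        # A pays out the investment
    b_cash = investment         # B receives it
    b_cash -= chip_purchase     # B spends it on A's chips
    a_cash += chip_purchase     # A receives the payment and reports it as revenue
    return {
        "a_reported_revenue": chip_purchase,
        "a_net_cash": a_cash,
        "b_net_cash": b_cash,
        "outside_money": a_cash + b_cash,  # money that entered from outside the loop
    }

print(circular_deal(100, 100))
# {'a_reported_revenue': 100, 'a_net_cash': 0, 'b_net_cash': 0, 'outside_money': 0}
```

In this toy case, A reports 100 in revenue even though no money from outside the loop was involved.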

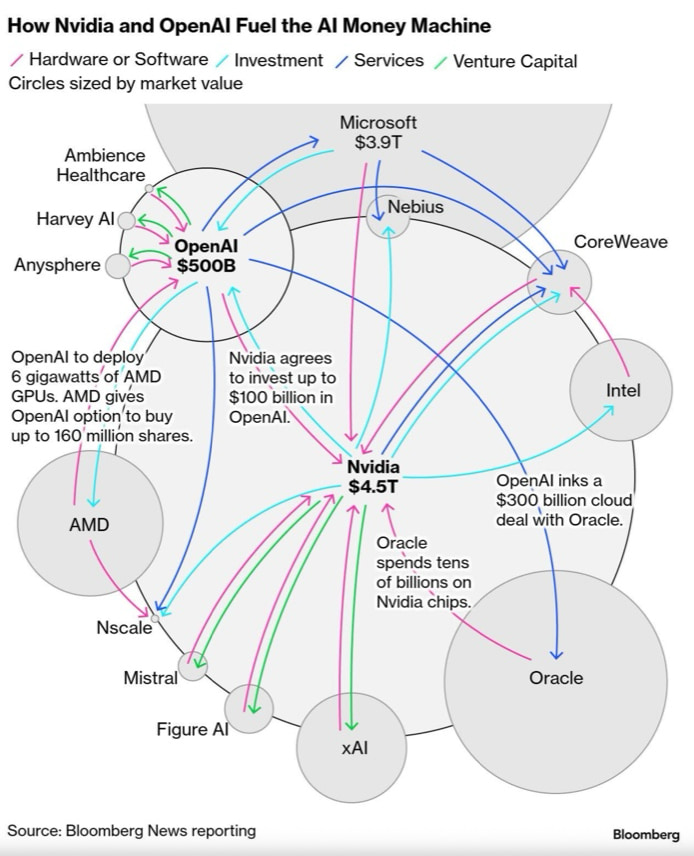

In the current AI frenzy, there is a great deal of circular financing activity going on. The illustration below, from Bloomberg, will give you the general idea:

Circular financing per se isn't illegal. However, it's slightly dodgy in some respects. For example, circular financing deals can create the impression that something profitable is happening and lead stock prices to rise -- even if no net money has changed hands.

Some ordinary companies do use circular financing to artificially boost financial metrics or manipulate stock prices.

There is no indication yet that any of the major AI companies are doing this with ill intent. However, the extent of circular financing in the AI sector is a matter of concern. It raises the question, is the frenzy of AI business activity based more on circular loops than it is on genuine demand from outside the loops?

Additionally, in such loops, revenue that is reported may rise, but actual cash flow might be weak regardless. Multiple analysts have flagged this type of issue as a potential problem. To quote Morgan Stanley in a Fall 2025 report:

"The AI ecosystem is increasingly circular — suppliers are funding customers and sharing revenue; there is cross-ownership and rising concentration. More ... disclosure is warranted to understand these relationships."

To put it another way, a large number of circular financing deals in a sector may both contribute to and help to conceal a bubble in the sector.
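The two-company example earlier in this section can be worked through as simple arithmetic. The sketch below is a simplified illustration, not a model of any real deal. The company names `ChipMaker` and `AILab`, the helper functions, and the amount are all hypothetical, and the sketch ignores the value of the equity stake and the cost of producing the chips.

```python
# Hypothetical sketch of a two-company circular financing loop.
# Names and amounts are illustrative assumptions, not real figures.
from dataclasses import dataclass


@dataclass
class Company:
    name: str
    cash: float = 0.0              # cash on hand
    reported_revenue: float = 0.0  # sales booked


def invest(investor: Company, target: Company, amount: float) -> None:
    """Investor hands cash to target for equity. No revenue is booked."""
    investor.cash -= amount
    target.cash += amount


def purchase(buyer: Company, seller: Company, amount: float) -> None:
    """Buyer pays seller for products. Seller books the payment as revenue."""
    buyer.cash -= amount
    seller.cash += amount
    seller.reported_revenue += amount


def run_loop(amount: float):
    """Run one investment-then-purchase loop and return the end state."""
    chip_maker = Company("ChipMaker", cash=amount)
    ai_lab = Company("AILab")
    group_cash_before = chip_maker.cash + ai_lab.cash

    invest(chip_maker, ai_lab, amount)    # money goes out of ChipMaker
    purchase(ai_lab, chip_maker, amount)  # the same money comes back

    group_cash_after = chip_maker.cash + ai_lab.cash
    return chip_maker, ai_lab, group_cash_after - group_cash_before


if __name__ == "__main__":
    chip_maker, ai_lab, net_new_cash = run_loop(100.0)
    print(f"{chip_maker.name} reported revenue: {chip_maker.reported_revenue}")
    print(f"{chip_maker.name} cash: {chip_maker.cash}")
    print(f"{ai_lab.name} cash: {ai_lab.cash}")
    print(f"Net new cash inside the loop: {net_new_cash}")
```

In this toy version, the chip maker reports revenue equal to the full amount, yet its cash balance ends where it started and no money has entered the loop from outside. That is the gap between reported revenue and actual cash flow described above.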

Data Center Issues

The AI sector is committed to building so many data centers, on such a large scale, that the buildout may have a significant impact on natural resources in the U.S. and raise electricity rates significantly for ordinary consumers nation-wide.

The natural resources issue includes dramatically high water usage in data center areas, the rapid destruction of plant and animal habitats on a large scale, and the gobbling up of huge quantities of raw materials such as rare earth elements and other minerals.

There will be second-order effects such as supply chain issues that affect ordinary consumers. This part is already happening. Prices for RAM and related products such as laptops are soaring. Small businesses that depend on chips of different types are being forced to cut back on production and even to fire people.

U.S. citizens are mobilizing to block the development of some of the new data centers. Whether or not the efforts are successful, the data center issues listed here are likely to be contentious for decades to come.

I asked an LLM about these data center issues. This is what it said. The emphasized words were emphasized by the LLM itself.

- The concern about dramatically high water usage in data centers is highly accurate.

- The statement about the rapid destruction of plant and animal habitats on a large scale is a growing concern.

- The "gobbling up of huge quantities of raw materials such as rare earth elements and other minerals" is also accurate.

- The claim that the move "may raise electricity rates significantly for ordinary consumers nation-wide" is a plausible and widely discussed potential outcome.

- The prediction of "second-order effects such as supply chain issues that affect ordinary consumers, once again nation-wide" is highly probable.

- The assertion that "the data center issues are likely to be contentious for decades to come" is a very strong and well-founded prediction.

Thank you, Mr. LLM, for agreeing that you actually are a bad idea.

Consumer-Side Business Models

One driver of the AI mania is the idea that some businesses will be able to replace many of their workers with unpaid AIs. Other companies, meanwhile, are hoping to sell AI services to the workers who have been fired. In other words, AI is seen as a way to squeeze profit out of people at two different levels.

I asked an LLM to comment on the preceding. The LLM said: "The statement presents a highly simplified and somewhat cynical view of the motivations behind the current AI boom." It didn't contest the essential truth of the statement.

AI services will typically be sold on a subscription basis.

AI service firms are already offering limited access for free or at little cost. In most cases, this is at a financial loss that isn't sustainable for large numbers of users. The plan is therefore to get people signed up and presumably hooked and then to enshittify the services.

"Enshittification" is a term coined by Cory Doctorow. It means increasing costs and reducing the quality of services once people are signed up. It's a common practice in the modern world.

One problem with the AI enshittification plan is that FOSS programs are going to be able to do some of the most desired AI services for free. For example:

The GIMP plus the G'MIC CNN2X filter already does high-quality neural net image upscaling. For free. A GPU isn't even required for this one. I do this all the time.

Avidemux already does high-quality neural net video upscaling. For free. I do this all the time as well.

What about free at-home LLM text generation and GenAI image generation? The first is already possible, too, and the second will be possible in the short term.

There are niche AI services that I don't see readily available FOSS alternatives for yet.

Two examples are outpainting -- adding content to images so as to extend them -- and adding subtitles to videos. But an LLM tells me that FOSS versions of both of those examples are on the horizon.

Niche AI services are unlikely to catch on in large volumes, regardless, for two simple reasons:

1. In most instances, the results for existing consumer AI services are mediocre at best. Manual effort is required to fix them. Due to the hallucination issue, and for other reasons, I don't expect this to improve much in the short term.

2. Relatively few of the niche AI services that exist, or that are going to exist, are going to be services that a large number of people will be willing to pay monthly or annually for. Even at low rates. Let alone at the high rates that these services are going to need to charge.

Most niche AI services are dead parrots. People who remember Monty Python will understand.

In addition to all of this, a number of major firms have been trying for years to force people to use AI in contexts where it isn't needed or welcome.

Microsoft "Copilot", I'm talking to you. Copilot is like Clippy come back to life. However, now he steals your personal work and resells it. Which is the next point. People are increasingly aware of the fact that commercial AIs steal what you put into them.

A Smart Toilet was announced at CES 2026. It watches what you do on the toilet and analyzes it. It isn't AI, as far as I know. Would you really want AI in this device or in other personal devices or on your laptop, waiting to send every detail of your life -- either on or off the toilet -- to corporations?

It isn't hyperbole. Readers are invited to type the words "vibrator spyware" into Google and see what turns up. And what *that* will show you is nothing compared to what corporations are going to do with AI.

Consumers, too, are increasingly aware of the mediocre or derivative or hollow results that AI presently produces and of the lack of relevance to their daily tasks and lives.

It's true that some software developers and architects, writers, and other creative workers have started to build workflows that AI enhances significantly. A counterpoint to that is that many of these people were frustrated and even angry when OpenAI updated ChatGPT from release 4 to release 5. The users were supposed to embrace the update. The actual reactions are food for thought related to the future of AI business models.

More typical people see the often pointless AI content that is flooding YouTube and other venues as "AI slop". The term "AI slop" is overused and is sometimes unfair. However, the fact is that AI content is increasingly unwelcome in both technical and ordinary venues.

Daniel Stenberg, the developer of curl, one of the most important FOSS projects in existence, is now simply banning reports from people who submit "AI slop". Stenberg says, "We still have not seen a single valid security report done with AI help."

I myself have been the subject of concerted online attacks as a response to the fact that I've used GenAI illustrations as clip-art in online posts. Some people really, really don't like AI.

But the anecdote that seems most telling is something that a mother who posted in a Fediverse thread mentioned in Fall 2025. She said that her 9-year-old son and his friends had started to use the word "AI" to mean "fake". To dismiss nonsense, the next generation says "Oh, that's AI".

Firms that would like to sell AI products or services or to put them into devices will need to demonstrate that the AI is actually needed. They will also need to build a level of trust that will be difficult to come by.

I asked an LLM about the preceding. I was surprised to see that the LLM agreed, to some degree, with every point.

In Closing

Future AI is inevitable. Present AI is bad.

Present AI is not about a new paradigm, a new economy, or a new era in which all is transformed. The proper technical term for that vision is "nonsense".

Present AI is a frenzy of greed and a mania of unprecedented scale. Just as with crypto and other manias, investing prematurely in the sector may lead to riches or to rags.
